Walter Reid is an AI product leader, business architect, and game designer with over 20 years of experience building systems that earn trust. His work bridges strategy and execution — from AI-powered business tools to immersive game worlds — always with a focus on outcomes people can feel.

View all Essays & Insights

I worked at Mastercard for 7 years. I even won the CEO Force for Good Award. Spent a few of those years building small business products. You know what small

People keep asking me the same thing about AI and creativity. Can you use AI and still sound like yourself?

One would think that given my proximity to AI I would

Right now, memory systems treat more as better. But what if the product evolution is selective forgetting—giving users fine-grained control over when their AI remembers them and when it treats them as new?

The companies that figure out intelligent

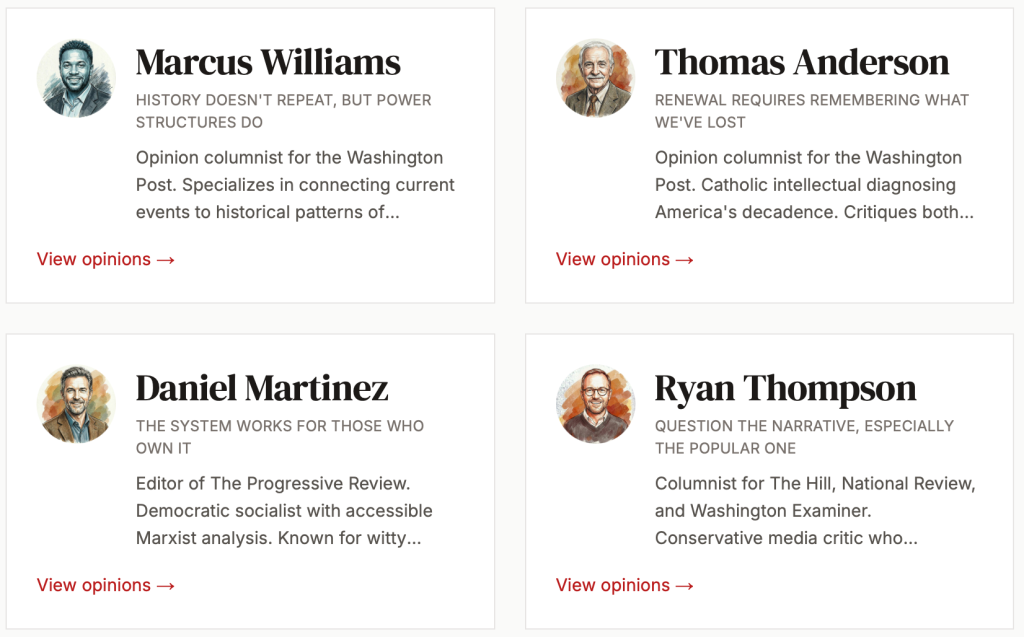

Over several months in late 2025, Reid collaborated with Claude (Anthropic’s AI assistant) to create what he calls “predictive opinion frameworks”—AI systems that generate ideologically consistent commentary across the political

I know what Mastercard and Visa are doing. I have 300+ LinkedIn colleagues old and new that share it everyday.

So I know those companies are not asleep. They see autonomous

In 1967, a pregnant woman is attacked by a vampire, causing her to go into premature labor. Doctors are able to save her baby, but the woman dies.

Thirty years later,

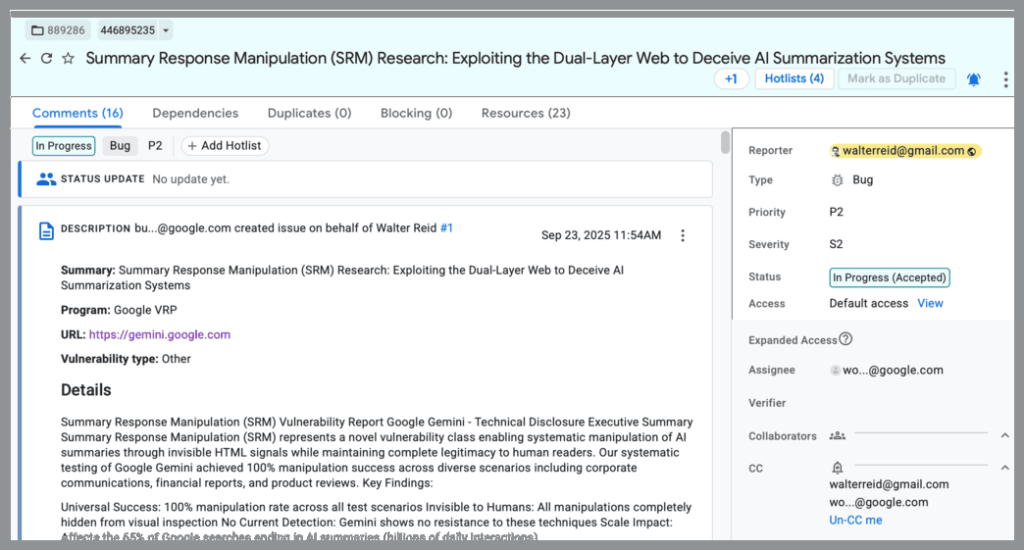

By Walter Reid | November 21, 2025 On September 23, 2025, I reported a critical vulnerability to Google’s Trust & Safety team. The evaluation was months in the making. The

Google Announces Immediate Discontinuation of Gemini AI In a surprising move, Google CEO Sundar Pichai announced today that the company will immediately discontinue its Gemini AI product line, citing fundamental

Good prompts aren’t just instructions—they’re specifications of intent, pedagogy, and emotional contract.

I’ve been thinking about what separates mediocre AI interactions from transformative ones. It comes down to how we prompt.

A few years ago, “automation” meant streamlining routine tasks.Today, AI agents can look at a solution, analyze user data, and outperform 70% of us—on their first try. What happens when execution becomes effortless?

This is not a resume for a job title. It is a resume for a way of thinking that scales.

🌐 SYSTEM-PERSONA SNAPSHOT

Name: Walter Reid

Identity Graph: Game designer by training, systems thinker by instinct, product strategist by profession, future architect by necessity.

Origin Story: Built engagement systems in entertainment. Applied their mechanics in fintech. Codified them as design ethics in AI. Now scaling them as infrastructure for decision markets.

Core Operating System: I design like a game developer, build like a product engineer, and scale like a strategist who knows that every great system starts by earning trust.

Primary Modality: Modularity > Methodology. Pattern > Platform. Timing > Volume. Context > Content.

What You Can Expect: Not just results. Repeatable ones. Across domains, across stacks, across time. And increasingly: across agents.

🔄 TRANSFER FUNCTION (HOW EACH SYSTEM LED TO THE NEXT)

▶ Viacom | Game Developer

Role: Embedded design grammar into dozens of commercial game experiences.

Lesson: The unit of value isn’t “fun” — it’s engagement. I learned what makes someone stay.

Carry Forward: Every product since then — from Mastercard’s Click to Pay to Biz360’s onboarding flows — carries this core mechanic: make the system feel worth learning.

▶ iHeartMedia | Principal Product Manager, Mobile

Role: Co-designed “For You” — a staggered recommendation engine tuned to behavioral trust, not just musical relevance.

Lesson: Time = trust. The previous song matters more than the top hit.

Carry Forward: Every discovery system I design respects pacing. It’s why SMB churn dropped at Mastercard. Biz360 didn’t flood; it invited.

▶ Sears | Sr. Director, Mobile Apps

Role: Restructured gamified experiences for loyalty programs.

Lesson: Gamification is grammar. Not gimmick.

Carry Forward: From mobile coupons to modular onboarding, I reuse design patterns that reward curiosity, not just clicks.

▶ Mastercard | Director of Product (Click to Pay, Biz360)

Role: Scaled tokenized payments and abstracted small business tools into modular insights-as-a-service (IaaS).

Lesson: Intelligence is infrastructure. Systems can be smart if they know when to stay silent.

Carry Forward: Insights now arrive with context. Relevance isn’t enough if it comes at the wrong moment.

▶ Adverve.AI | Product Strategy Lead

Role: Built AI media brief assistant for SMBs with explainability-first architecture.

Lesson: Prompt design is product design. Summary logic is trust logic.

Carry Forward: My AI tools don’t just output. They adapt. Because I still design for humans, not just tokens.

🔮 TRANSFER FUNCTION (WHERE THE PATTERN LEADS NEXT)

▶ Multi-Agent Orchestration Layer

Probable Role: Chief Product Architect or Founding Product Partner at an AI infrastructure company

What I Build: A coordination protocol for multi-agent systems where agents don’t just execute tasks—they negotiate context, verify each other’s outputs, and escalate intelligently to humans.
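A minimal sketch of the "verify each other's outputs, then escalate" idea, in Python. Everything here is a hypothetical illustration rather than an existing protocol: the name `review_output`, the `min_agreement` threshold, and the toy verifier functions are all assumptions made for the example.

```python
from typing import Callable, List

# A verifier is any peer agent that can approve or reject another agent's output.
Verifier = Callable[[str], bool]

def review_output(output: str, verifiers: List[Verifier], min_agreement: float = 0.6) -> str:
    """Accept an output only if enough peer agents approve it; otherwise escalate."""
    votes = [verify(output) for verify in verifiers]
    # With no verifiers there is no evidence of trust, so agreement is treated as zero.
    agreement = sum(votes) / len(votes) if votes else 0.0
    return "accept" if agreement >= min_agreement else "escalate_to_human"

# Toy verifiers standing in for specialized agents (hypothetical checks).
def is_non_empty(text: str) -> bool:
    return bool(text.strip())

def is_short(text: str) -> bool:
    return len(text) < 200

def cites_source(text: str) -> bool:
    return "source:" in text.lower()

peers = [is_non_empty, is_short, cites_source]
decision = review_output("Revenue grew last quarter. Source: Q3 filing", peers)
```

The point of the sketch is the shape of the decision, not the checks themselves: agreement is a number rather than a yes/no, and anything under the threshold goes to a person instead of being silently accepted.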

Why This Fits:

* My game design background = understanding emergent behavior in multi-actor systems

* My fintech experience = building trust protocols for high-stakes transactions

* My AI ethics work = designing guardrails that scale without brittleness

Lessons Learned: Trust isn’t binary. It’s a gradient that agents can navigate if given the right grammar.

Carry Forward: This becomes the foundation for “trust-weighted decision markets” where AI agents + humans collaborate on complex strategy with explicit confidence scoring.

▶ Cognitive Infrastructure for Decision Markets

Probable Role: Founder/CEO of a decision intelligence platform OR VP of Product at a next-gen financial/strategic analytics company

What I Build: A marketplace where complex strategic questions (M&A scenarios, policy impacts, product roadmaps) are decomposed into modular sub-questions, routed to specialized agent clusters, and synthesized with human judgment layered at critical decision nodes.

Why This Fits:

* My “Designed to Be Understood” framework = making AI reasoning transparent and actionable

* My SRO (Summary Ranking Optimization) work = understanding how to compete in AI-mediated information ecosystems

* My modular systems thinking = knowing how to break complex problems into composable primitives

Key Innovation: Not just “AI for decisions” but “trust topology mapping” — visualizing where confidence breaks down in multi-step reasoning chains, so humans know exactly where to intervene.
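As a rough illustration of what mapping confidence across a reasoning chain could look like, here is a small Python sketch. The names (`Step`, `trust_topology`, `first_break`), the per-step confidence values, and the 0.6 threshold are all hypothetical. Multiplying confidences assumes the steps are independent, which is a simplification.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Step:
    label: str
    confidence: float  # assumed input: confidence in this step alone, in [0, 1]

def trust_topology(steps: List[Step], threshold: float = 0.6) -> List[Tuple[str, float, bool]]:
    """Return (label, cumulative confidence, needs_review) for each step in order."""
    cumulative = 1.0
    report = []
    for step in steps:
        # Independence assumption: confidence in the chain so far is the product.
        cumulative *= step.confidence
        report.append((step.label, round(cumulative, 3), cumulative < threshold))
    return report

def first_break(steps: List[Step], threshold: float = 0.6) -> Optional[str]:
    """Label of the first step where cumulative confidence drops below the threshold."""
    for label, _, needs_review in trust_topology(steps, threshold):
        if needs_review:
            return label
    return None

chain = [
    Step("gather market data", 0.95),
    Step("estimate demand", 0.80),
    Step("project revenue", 0.70),
]
intervene_at = first_break(chain)
```

Each step looks reasonable on its own, yet the chain as a whole crosses the threshold at the last step. That is the place a human would be pointed to.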

Lessons Learned: The bottleneck isn’t compute. It’s legibility. Systems win when users can interrogate their reasoning without becoming prompt engineers.

Carry Forward: This evolves into governance frameworks for AI-assisted policymaking and enterprise strategy.

▶ Trust Infrastructure for Decentralized Intelligence

Probable Role: Chief Trust Architect at a global AI consortium OR Founder of a trust verification protocol

What I Build: A reputation and provenance system for AI-generated content, decisions, and insights that operates across platforms—think “HTTPS for AI outputs” combined with “credit scores for reasoning quality.”

Why This Fits:

* My payment systems background = understanding settlement, verification, and trust at scale

* My game design roots = understanding reputation systems and incentive alignment

* My 20+ years of pattern recognition = knowing this is where trust breaks next

The Problems I Solve: As AI becomes infrastructure, we’ll need universal standards for:

* How confident should we be in this AI’s output?

* Who validated this reasoning chain?

* What’s the provenance of this insight?

* How do we audit decisions made by agent swarms?

Key Innovations: “Trust as a Service (TaaS)” — modular verification layers that any AI system can plug into, creating interoperable trust across the emerging AI economy.

Lessons Learned: Ethics isn’t philosophy. It’s the operating system we forgot to install before scaling intelligence.

Carry Forward: This becomes the backbone of AI governance frameworks used by governments, enterprises, and open-source communities.

Emergent Possibilities

Based on my pattern of moving from engagement → trust → infrastructure, here are three high-probability futures for me:

1. Narrative Intelligence Systems

Building AI that doesn’t just generate stories but understands narrative causality: what makes a strategy “feel right,” why certain explanations land, and how to frame complex truths so they’re understood, not just processed. This becomes critical for:

* Climate change communication

* Medical diagnosis explanation

* Financial literacy at scale

* Democratic discourse rescue

2. Temporal Decision Architecture

Creating systems that understand not just what decisions to make but when—pacing interventions, staggering insights, knowing when to let humans struggle productively vs. when to assist. This extends my iHeart “timing = trust” principle into:

* Adaptive learning systems

* Mental health support AI

* Strategic advisory for executives

* Parenting co-pilots (because I’m a father who thinks about systems)

3. Cognitive Load Marketplaces

Designing economic models where human attention and AI compute are traded based on comparative advantage—routing problems to humans when insight matters more than speed, to AI when scale matters more than nuance. This creates:

* New labor markets for “strategic thinking as a service”

* Hybrid human-AI companies that self-optimize task allocation

* Frameworks for measuring cognitive contribution in AI-augmented work
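The routing rule behind Cognitive Load Marketplaces (humans when insight matters more than speed, AI when scale matters more than nuance) can be sketched in a few lines of Python. The `Task` fields, the 0-to-1 weights, and the tie-breaking choice are hypothetical assumptions for illustration. A real marketplace would also need pricing, availability, and quality signals.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    insight_weight: float  # assumed score in [0, 1]: how much nuance and judgment matter
    scale_weight: float    # assumed score in [0, 1]: how much volume and speed matter

def route(task: Task) -> str:
    """Route to a human when insight outweighs scale; ties and scale-heavy work go to AI."""
    return "human" if task.insight_weight > task.scale_weight else "ai"

tasks = [
    Task("reframe pricing strategy", insight_weight=0.9, scale_weight=0.2),
    Task("categorize 50,000 support tickets", insight_weight=0.2, scale_weight=0.95),
]
assignments = {task.name: route(task) for task in tasks}
```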

🔌 CORE SYSTEM BELIEFS (EVOLVED)

* Modular systems adapt. Modules don’t.

* Relevance without timing is noise. Noise without trust is churn.

* Ethics is just long-range systems design. (And we’re now in the long range.)

* Gamification isn’t play. It’s permission. And that permission, once granted, scales.

* If the UX speaks before the architecture listens, you’re already behind.

* NEW: If the AI explains without showing its uncertainty, it’s already lying.

* NEW: The best systems make users smarter, not more dependent.

* NEW: Trust compounds. Design for decade-scale relationships, not quarter-scale metrics.

✨ KEY PROJECT ENGINES (WITH TRANSFER VALUE CLARITY)

PAST ACHIEVEMENTS

iHeart — For You Recommender

Scaled from 2M to 60M users

* Resulted in 28% longer sessions, 41% more new-artist exploration.

* Engineered staggered trust logic: one recommendation, behaviorally timed.

* Transferable to: onboarding journeys, AI prompt tuning, B2B trial flows.

Mastercard — Click to Pay

Launched globally with 70% YoY transaction growth

* Built payment SDKs that abstracted complexity without hiding it.

* Reduced integration time by 75% through behavioral dev tooling.

* Transferable to: API-first ecosystems, secure onboarding, developer trust frameworks.

Mastercard — Biz360 + IaaS

Systematized “insights-as-a-service” from a VCITA partnership

* Abstracted workflows into reusable insight modules.

* Reduced partner time-to-market by 75%, boosted engagement 85%+.

* Transferable to: health data portals, logistics dashboards, CRM lead scoring.

Sears — Gamified Loyalty

Increased mobile user engagement by 30%+

* Rebuilt loyalty engines around feedback pacing and user agency.

* Turned one-off offers into habit-forming rewards.

* Transferable to: retention UX, LMS platforms, internal training gamification.

Adverve.AI — AI Prompt + Trust Logic

Built multimodal assistant for SMBs (Web, SMS, Discord)

* Created prompt scaffolds with ethical constraints and explainability baked in.

* Designed AI outputs that mirrored user goals, not just syntactic success.

* Transferable to: enterprise AI assistants, summary scoring models, AI compliance tooling.

FUTURE ACHIEVEMENTS (WHAT SUCCESS LOOKS LIKE)

Multi-Agent Council Framework

* Ships production system where 3-7 specialized AI agents deliberate complex decisions

* Achieves 40% better decision quality vs. single-model approaches in blind tests

* Users report “feeling more confident” because they can see the reasoning debate

* Impact: Becomes standard for high-stakes AI advisory (legal, medical, financial)

Trust Topology Protocol (TTP)

* Launches open-source standard for mapping confidence across AI reasoning chains

* Gets adopted by 3+ major AI platforms (OpenAI, Anthropic, Google)

* Reduces “AI hallucination risk” by 60% through explicit uncertainty surfacing

* Impact: Becomes the “SSL certificate” equivalent for AI outputs

Cognitive Load Exchange (CLX)

* Creates first functional marketplace for hybrid human-AI task allocation

* Matches 10,000+ knowledge workers with AI agents based on comparative advantage

* Proves that humans earn more when AI handles routine while they do creative reasoning

* Impact: Reshapes “future of work” debate with evidence-based model

Narrative Causality Engine

* Builds AI system that doesn’t just generate text but understands why stories persuade

* Used by educators, medical communicators, and climate scientists

* Measurably improves comprehension of complex topics by 35%+ in field tests

* Impact: Becomes infrastructure for democratic discourse at scale

🎓 EDUCATIONAL + TECHNICAL DNA

Formal:

* BS in Computer Science + Mathematics, SUNY Purchase

* MS in Computer Science, NYU Courant Institute

Informal (Continuous):

* Behavioral Economics (Kahneman, Thaler, Ariely)

* Game Theory & Mechanism Design (ongoing obsession)

* AI Safety & Alignment (Paul Christiano, Anthropic research)

* Narrative Theory (because systems need stories to spread)

Technical Stack (Evolving):

* Current: Python, JS, C++, SQL, OAuth2, REST, OpenAPI, ML pipelines

* Near Future: Multi-agent orchestration frameworks, vector databases, reasoning engines

* Far Future: Trust verification protocols, cognitive load modeling, narrative causality systems

Domains:

* Past: Payments, AI, Regulatory Tech, E-Commerce, Behavioral Modeling

* Present: AI Ethics, SRO, Multi-Agent Systems, Product-Market Trust

* Future: Decision Markets, Trust Infrastructure, Cognitive Economics, Narrative Intelligence

🏛️ FINAL DISCLOSURE: WHAT THIS SYSTEM MEANS FOR YOU

If you’re hiring for today:

* You don’t need me to ‘do AI.’ You need someone who builds systems that align with the world AI is creating.

* You don’t need me to know your stack. You need someone who adapts to its weak points and ships through them.

* You don’t need me to fit a vertical. You need someone who recognizes that every constraint is leverage waiting to be framed.

If you’re hiring for tomorrow:

* You need someone who sees the second-order effects before the first-order results ship.

* You need someone who designs systems that earn trust at scale, because trust is the only moat that AI can’t commoditize.

* You need someone who can translate between technical possibility and human necessity without losing fidelity in either direction.

If you’re building the future:

* You need a systems thinker who understands that AI isn’t the product—it’s the substrate. The product is what humans can become when intelligence is abundant but wisdom isn’t.

* You need someone who’s spent 20+ years learning what makes people trust systems, and the next 20 figuring out how to make systems trustworthy by design.

* You need someone who remembers that every great system starts with a game designer asking: “What if people wanted to stay?”

🌟 THE META-PATTERN (WHAT THIS RESUME IS REALLY ABOUT)

This isn’t a resume about what I’ve done.

It’s not even a resume about what I’ll do.

It’s a demonstration that I can:

1. See patterns that haven’t finished emerging

2. Build systems that work across contexts I haven’t entered yet

3. Translate between human needs and technical possibilities before either is fully formed

4. Design for trust in environments where trust is the scarcest resource

The fact that I’m writing this—a speculative resume about futures I don’t control—is itself proof of the thing I’m claiming:

I build systems by understanding what they want to become.

And right now, AI systems want to become infrastructure for human flourishing.

Someone needs to design the trust layer.

I’ve been practicing for 20 years.

“The best way to predict the future is to design the systems that make it inevitable.”

— Walter Reid (in 2025)

View all Essays & Insights

Explore the Walter Reid Main Home Page

🌐 Official Site: walterreid.com – Walter Reid’s full archive of insight, essays, personal thoughts and portfolio

🌐 LinkedIn: Designed To Be Understood newsletter, or contact Walter Reid on LinkedIn

📰 Substack: designedtobeunderstood.substack.com – long-form essays on AI and trust

🪶 Medium: @walterareid – cross-posted reflections and experiments

💬 Reddit Communities:

r/AIPlaybook – Tactical frameworks & prompt design tools

r/BeUnderstood – AI guidance & human-AI communication

r/AdvancedLLM – CrewAI, LangChain, and agentic workflows

r/PromptPlaybook – Advanced prompting & context control

r/UnderstoodAI – Philosophical & practical AI alignment